MCD estimators

The MCD estimator works as follows:

Fix an integer h such that where [ \; ] denotes the integer part. The preferred choice of h for outlier detection is its lower bound, which yields the breakdown value [(n+v+1)/2]/n. Let \hat \mu_{MCD} and \hat \Sigma_{MCD} be the mean and the covariance matrix of the subset of h observations for which the determinant of the covariance matrix is minimal. \hat \mu_{MCD} is defined to be the MCD estimator of \mu, whereas the MCD estimator of \Sigma is proportional to \hat \Sigma_{MCD}.

The proportionality constant is chosen to achieve consistency at the normal model. It was derived by Butler et al. (1993) and by Croux and Haesbroeck (1999) as

c_{MCD}(h,n,v)=\frac{h/n}{P\Big(X_{v+2}^2 < \chi_{v,h/n}^2 \Big)}The MCD is used because it has rate of convergence n^{−0.5}, unlike the minimum volume ellipsoid estimator (Davies, 1992) for which convergence is at rate n^{-1/3}. Another reason is the fast algorithm of Rousseeuw and Van Driessen (1999), which has been implemented in many languages.

Although consistent at the normal model, the estimator

c_{MCD}(h,n,v)\hat \Sigma_{MCD} is still biased for small sample sizes in the sense that the empirical size is much greater than the nominal one. Pison et al. (2002) showed by Monte Carlo simulation the importance of applying a small sample correction factor to it to achieve an empirical size much closer to the nominal. Let s_{MCD}(h,n,v) be this factor for a specific choice of n and v. The resulting (squared) robust Mahalanobis distances are then d_{(MCD)i}^2=k_{MCD}(y_i-\hat \mu_{MCD})^T \hat \Sigma_{MCD}^{-1}(y_i-\hat \mu_{MCD}), \quad \quad \quad i=1, \dots,n, where k_{MCD}=\{c_{MCD}(h,n,v) \; s_{MCD}(h,n,v) \}^{-1}. These distances are compared with the \alpha\% cutoff value of their asymptotic \chi^2 distribution with v degrees of freedom, with \alpha in most published reports between 0.01 and 0.05.The exact finite sample distribution of the robust Mahalanobis distances analyzed above is unknown, but Hardin and Rocke (2005) proposed a scaled F-approximation which, in small and moderate samples, outperforms the asymptotic \chi_v^2 distribution approximation of MCD

To increase efficiency, a reweighted version of the MCD estimators is often used in practice. These reweighted estimators, \hat \mu_{RMCD} and \hat \Sigma_{RMCD} are computed by giving weight 0 to observations for which d_{(MCD)i} exceeds a cut-off value. Thus a first subset of h observations is used to select a second subset from which the parameters are estimated. The default choice for this cut-off is

\surd \chi_{v,0.025}^2 \timesBoth the consistency (Croux and Haesbroeck, 2000) and the small sample (Pison et al., 2002) correction factors c_{RMCD}(h,n,v) and s_{RMCD}(h,n,v) can be applied to \hat \Sigma_{RMCD} when the squared robust Mahalanobis distances become

d_{(RMCD-C)i}^2=k_{RMCD-C}(y_i-\hat \mu_{RMCD})^T \hat \Sigma_{RMCD}^{-1}(y_i-\hat \mu_{RMCD}), \quad \quad \quad i=1,\dots,n, with k_{RMCD-C}=\{c_{RMCD}(h,n,v) \; s_{RMCD}(h,n,v) \}^{-1} . The reweighted distances are again compared with their asymptotic \chi_v^2-distribution (Lopuhaä, 1999).

Example 1

The code below loads the heads dataset and launch the MCD outlier detection procedure

% Load the data

load('head');

Y=head{:,:};

% Use function FSM (Forward search in multivariate analysis with automatic outlier detection purposes)

[RAW,REW]=mcd(Y,'plots',1,'conflev',1-0.01/size(Y,1));

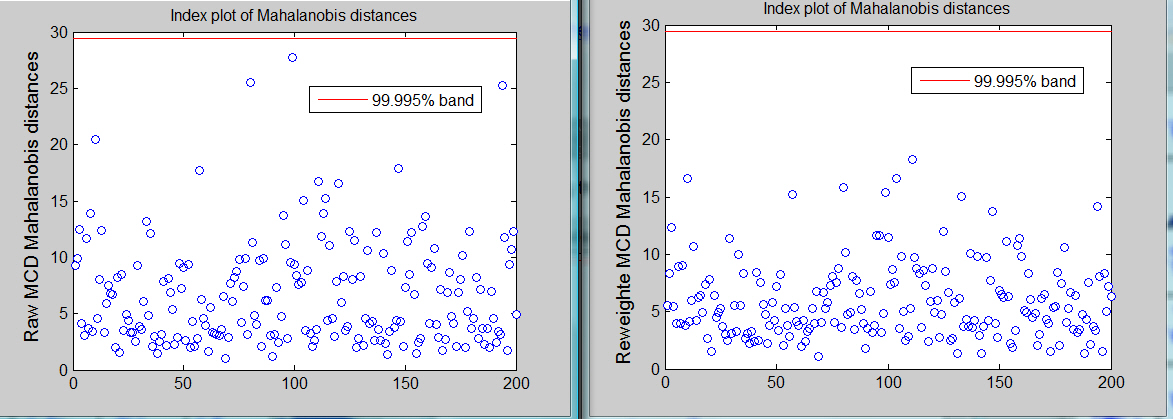

No outlier is declared using the Bonferroni threshold using raw or reweuighted mcd.

Example 2

The code below loads the mussels dataset and performs an automatic outlier detection procedure in the original scale and then in the BoxCox transformed scale.

Analysis on the original scaleload('mussels');

Y=mussels{:,:};

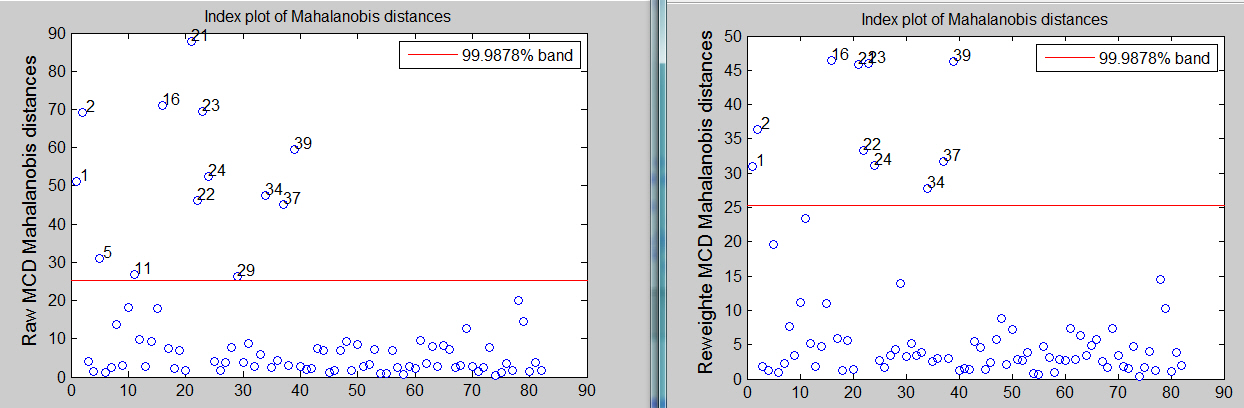

[RAW,REW]=mcd(Y,'plots',1,'conflev',1-0.01/size(Y,1));

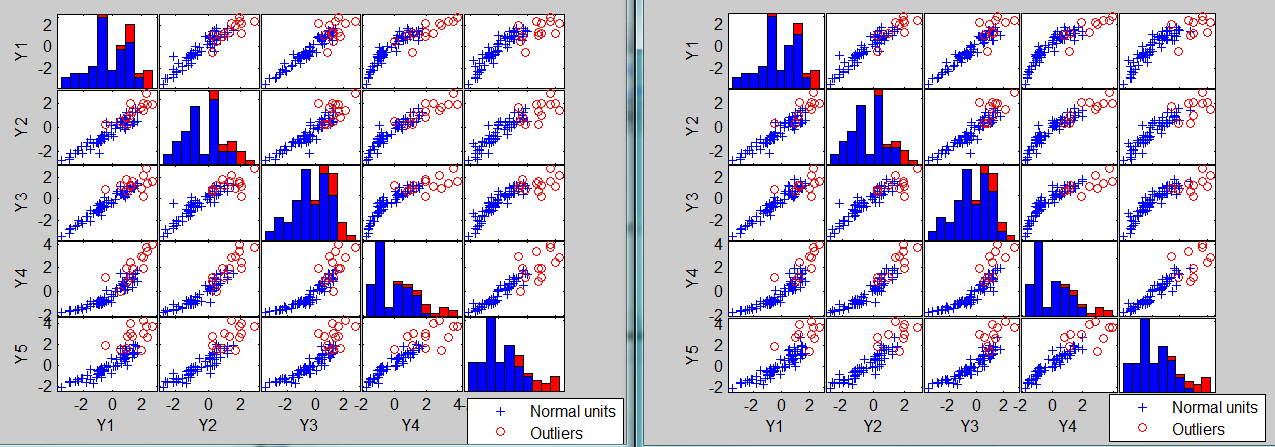

The associated scatter plot matrices with the outliers highlighted, which are produced atuomatically are shown below.

The analysis on the transformed scale is shown below

load('mussels');

Y=mussels{:,:};

la=[0.5 0 0.5 0 0];

v=size(Y,2);

Y=normBoxCox(Y,1:v,la);

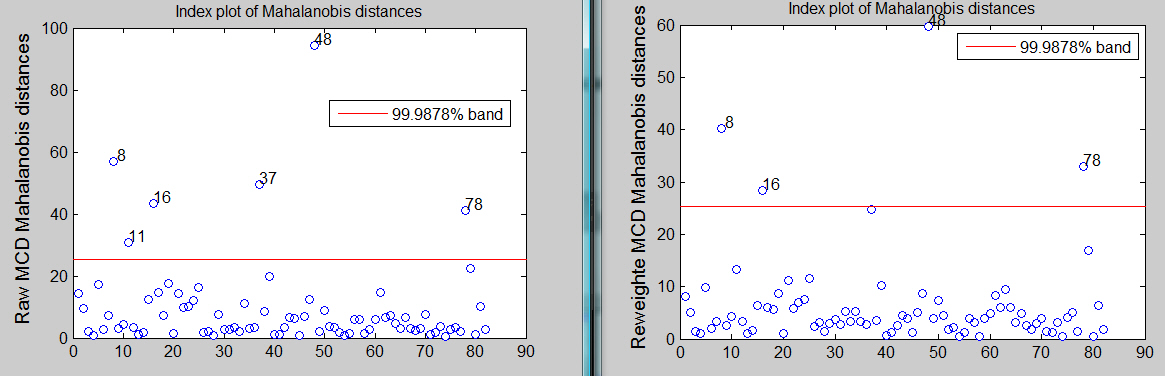

[RAW,REW]=mcd(Y,'plots',1,'conflev',1-0.01/size(Y,1))

The raw MCD seems to declare 6 outliers while the reweighted MCD finds just 4.

You can compare this output with that which comes out from the use of S and MM estimators or those based on the forward search.| Functions |

• The developers of the toolbox• The forward search group • Terms of Use• Acknowledgments