OptimalCuttingFrequency

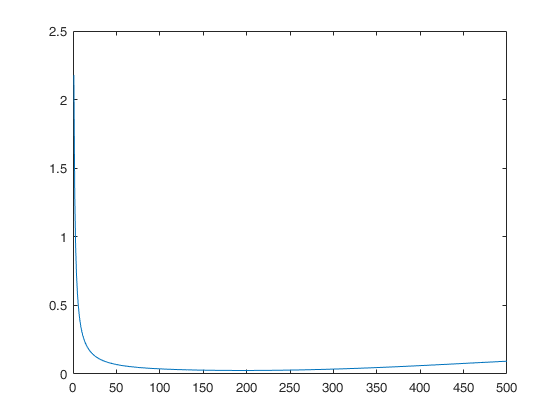

OptimalCuttingFrequency computes the optimal cutting frequency for the Fourier estimator of the integrated variance.

Syntax

Nopt = OptimalCuttingFrequency(x,t)

Description

OptimalCuttingFrequency computes the optimal cutting frequency to use when running the Fourier estimator of the integrated variance on noisy time-series data.
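To show what the cutting frequency controls, here is a minimal Python sketch of the standard Dirichlet-kernel form of the Fourier estimator of integrated variance (see Mancino, Recchioni, Sanfelici, 2017). The estimate is (2π)²/(2N+1) times the sum over |s| ≤ N of c_s(dx)·c_{-s}(dx), where c_s(dx) are the Fourier coefficients of the observed increments. This is not the toolbox's implementation and it does not compute the optimal N. The function name fourier_integrated_variance, the assumption that observation times are already rescaled to [0, 2π], and the simulation parameters are all hypothetical.

```python
import numpy as np


def fourier_integrated_variance(x, t, N):
    """Sketch of the Fourier (Dirichlet-kernel) integrated-variance estimator.

    x : observations x(t_0), ..., x(t_n)
    t : increasing observation times, ASSUMED already rescaled to [0, 2*pi]
    N : cutting frequency (highest Fourier mode retained)
    """
    x = np.asarray(x, dtype=float)
    t = np.asarray(t, dtype=float)
    dx = np.diff(x)           # increments x(t_{j+1}) - x(t_j)
    tj = t[:-1]               # left endpoint of each increment
    s = np.arange(-N, N + 1)  # retained Fourier modes |s| <= N
    # c_s(dx) = (1 / 2pi) * sum_j exp(-i s t_j) * dx_j, for every retained s
    c = np.exp(-1j * np.outer(s, tj)) @ dx / (2 * np.pi)
    # For real data c_{-s} = conj(c_s), so c_s * c_{-s} = |c_s|^2
    return (2 * np.pi) ** 2 / (2 * N + 1) * np.sum(np.abs(c) ** 2)


# Hypothetical illustration: a constant-volatility Brownian diffusion on
# [0, 2*pi], observed with additive i.i.d. Gaussian noise.
rng = np.random.default_rng(0)
n = 1000
t = np.linspace(0.0, 2 * np.pi, n + 1)
sigma, noise_sd = 0.2, 0.005
increments = sigma * np.sqrt(np.diff(t)) * rng.standard_normal(n)
p = np.concatenate(([0.0], np.cumsum(increments)))  # efficient process
x = p + noise_sd * rng.standard_normal(n + 1)        # noisy observations

print("true integrated variance:", sigma**2 * 2 * np.pi)
for N in (5, 50, 500):
    print("N =", N, "estimate =", fourier_integrated_variance(x, t, N))
```

On noisy data, larger values of N tend to inflate the estimate because the high-frequency modes absorb more of the noise. Very small N, on the other hand, discards information about the diffusion. The optimal cutting frequency is meant to balance these two effects.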

Examples

Input Arguments

Output Arguments

More About

References

Mancino, M.E., Recchioni, M.C., Sanfelici, S. (2017), Fourier-Malliavin Volatility Estimation: Theory and Practice, SpringerBriefs in Quantitative Finance, Springer.

Sanfelici, S., Toscano, G. (2024), The Fourier-Malliavin Volatility (FMVol) MATLAB toolbox, available on arXiv.

Computation of the optimal cutting frequency for estimating the integrated variance from a vector x of noisy observations of a univariate diffusion process.
