regressHhar

regressHhar fits a multiple linear regression model with Harvey heteroskedasticity

Description

Examples

regressHhar with all default options.

Monthly credit card expenditure for 100 individuals.

% Results in structure "out" coincide with "Maximum Likelihood

% Estimates" of table 11.3, page 235, 5th edition of Greene (1987).

% Results in structure "OLS" coincide with "Ordinary Least Squares

% Estimates" of table 11.3, page 235, 5th edition of Greene (1987).

load('TableF91_Greene');

data=TableF91_Greene{:,:};

n=size(data,1);

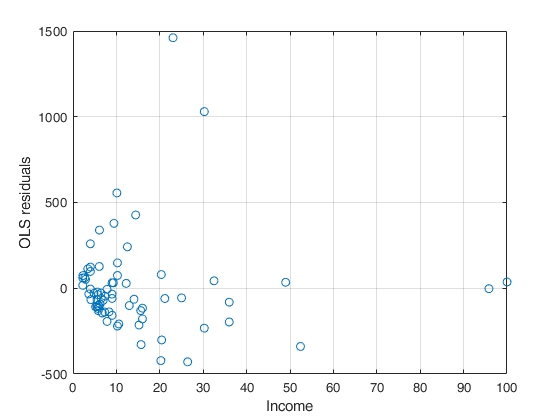

% Linear regression of monthly expenditure on a constant, age, income and

% its square and a dummy variable for home ownership using the 72

% observations for which expenditure was nonzero produces the residuals

% plotted below.

X=zeros(n,4);

X(:,1)=data(:,3); % age

X(:,2)=data(:,6); % Own rent (dummy variable)

X(:,3)=data(:,4); % Income

X(:,4)=(data(:,4)).^2; % Income square

y=data(:,5); % Monthly expenditure

% Select the 72 observations for which expenditure was nonzero

sel=y>0;

X=X(sel,:);

y=y(sel);

whichstats={'r','tstat'};

OLS=regstats(y,X,'linear',whichstats);

r=OLS.r;

disp('Ordinary Least Squares Estimates')

LSest=[OLS.tstat.beta OLS.tstat.se OLS.tstat.t OLS.tstat.pval];

disp(LSest)

disp('Multiplicative Heteroskedasticity Model')

% The variables which enter the scedastic function are Income and

% Income square (that is columns 3 and 4 of matrix X).

out=regressHhar(y,X,[3 4]);

% Plot OLS residuals against Income (This is nothing but Figure 11.1 of

% Greene (5th edition) p. 216).

plot(X(:,3),r,'o') % column 3 of X is Income (column 4 is Income square)

xlabel('Income')

ylabel('OLS residuals')

grid on

Ordinary Least Squares Estimates

-237.1465 199.3517 -1.1896 0.2384

-3.0818 5.5147 -0.5588 0.5781

27.9409 82.9223 0.3370 0.7372

234.3470 80.3660 2.9160 0.0048

-14.9968 7.4693 -2.0078 0.0487

Multiplicative Heteroskedasticity Model

regressHhar with optional arguments.

The data in Appendix Table F6.1 were used in a study of efficiency in production of airline services in Greene (2007a).

% See p. 557 of Greene (7th edition).

% Results in structure "out.Beta" coincide with those of

% table 14.3 page 557, 7th edition of Greene (2007).

% (line of the table which starts with MLE)

load('TableF61_Greene');

Y=TableF61_Greene{:,:};

Q=log(Y(:,4));

Pfuel=log(Y(:,5));

Loadfactor=Y(:,6);

n=size(Y,1);

X=[Q Q.^2 Pfuel];

y=log(Y(:,3));

whichstats={'beta', 'r','tstat'};

OLS=regstats(y,X,'linear',whichstats);

disp('Ordinary Least Squares Estimates')

LSest=[OLS.tstat.beta OLS.tstat.se OLS.tstat.t OLS.tstat.pval];

disp(LSest)

% Estimate a multiplicative heteroscedastic model and print the

% estimates of regression and scedastic parameters together with LM, LR

% and Wald test.

out=regressHhar(y,X,Loadfactor,'msgiter',1,'test',true);

Related Examples

FGLS estimator.

Estimate a multiplicative heteroscedastic model using just one iteration, that is, find the FGLS estimator (two-step estimator).

% Data are monthly credit card expenditure for 100 individuals.

% Results in structure "out" coincide with estimates of row

% "$\sigma^2_i=\sigma^2 \exp(z' \alpha)$" in table 11.2, page 231, 5th edition of

% Greene (1987).

load('TableF91_Greene');

data=TableF91_Greene{:,:};

n=size(data,1);

% Linear regression of monthly expenditure on a constant, age, income and

% its square and a dummy variable for home ownership using the 72

% observations for which expenditure was nonzero produces the residuals

% plotted below.

X=zeros(n,4);

X(:,1)=data(:,3); % age

X(:,2)=data(:,6); % Own rent (dummy variable)

X(:,3)=data(:,4); % Income

X(:,4)=(data(:,4)).^2; % Income square

y=data(:,5); % Monthly expenditure

% Select the 72 observations for which expenditure was nonzero

sel=y>0;

X=X(sel,:);

y=y(sel);

out=regressHhar(y,X,[3 4],'msgiter',1,'maxiter',1);

Regression parameters beta

Coeff. SE t-stat

-117.8675 50.4970 -2.3341

-1.2337 1.2707 -0.9709

50.9498 26.3050 1.9369

145.3045 23.0917 6.2925

-7.9383 1.8611 -4.2653

Scedastic parameters gamma from first iteration

Coeff.

4.1374

2.4857

-0.2449

Input Arguments

y — Response variable.

Vector.

A vector with n elements that contains the response variable.

It can be either a row or column vector.

Data Types: single | double

X — Predictor variables in the regression equation.

Matrix.

Data matrix of explanatory variables (also called 'regressors') of dimension n x (p-1). Rows of X represent observations, and columns represent variables.

By default, there is a constant term in the model, unless you explicitly remove it using option intercept, so do not include a column of 1s in X.

Data Types: single | double

Z — Predictor variables in the skedastic equation.

Matrix.

n x r matrix or vector of length r.

If Z is a n x r matrix it contains the r variables which form the scedastic function as follows:

\[ \sigma^2_i = \exp(\gamma_0 + \gamma_1 Z_{i1} + \ldots + \gamma_{r} Z_{ir}). \]

If Z is a vector of length r it contains the indexes of the columns of matrix X which form the scedastic function as follows:

\[ \sigma^2_i = \exp(\gamma_0 + \gamma_1 X(i,Z(1)) + \ldots + \gamma_{r} X(i,Z(r))). \]

Therefore, if, for example, the explanatory variables responsible for heteroscedasticity are columns 3 and 5 of matrix X, it is possible to use either the syntax regressHhar(y,X,X(:,[3 5])) or the syntax regressHhar(y,X,[3 5]).

Remark: Missing values (NaN's) and infinite values (Inf's) are allowed, since observations (rows) with missing or infinite values will automatically be excluded from the computations.

Data Types: single | double
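The two accepted forms of Z, and the row-wise exclusion of missing or infinite values, can be illustrated with a short sketch in plain MATLAB. It only mimics the behaviour described above and is not the toolbox's own input-checking code; the rule used to tell the two forms apart, the variable names (Zmat, ok) and the toy data are assumptions made for this illustration.

```matlab
% Toy data: n=6 observations, 3 explanatory variables (hypothetical values)
X = [1 2 3; 4 5 6; 7 8 9; 2 4 8; 3 NaN 1; 5 1 Inf];
y = [1; 2; 3; 4; 5; 6];
n = size(X,1);

Zidx  = [2 3];          % form 1: indexes of the columns of X
Zfull = X(:,[2 3]);     % form 2: the n-by-r matrix itself

% Assumed rule for this sketch: an input with n rows is treated as data,
% anything else as a vector of column indexes
Z = Zidx;
if size(Z,1) == n
    Zmat = Z;
else
    Zmat = X(:,Z);
end

% Both forms lead to the same scedastic variables (isequaln treats NaNs as equal)
sameZ = isequaln(Zmat,Zfull);

% Keep only the rows in which y, X and Z are all finite (no NaN, no Inf)
ok = all(isfinite([y X Zmat]),2);
yc = y(ok);
Xc = X(ok,:);
Zc = Zmat(ok,:);
```

In this toy data set the last two rows contain a NaN and an Inf, so only the first four observations would enter the computations.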

Name-Value Pair Arguments

Specify optional comma-separated pairs of Name,Value arguments. Name is the argument name and Value is the corresponding value. Name must appear inside single quotes (' '). You can specify several name and value pair arguments in any order as Name1,Value1,...,NameN,ValueN.

Example: 'intercept',false, 'initialbeta',[3.6 8.1], 'initialgamma',[0.6 2.8], 'maxiter',8, 'tol',0.0001, 'msgiter',0, 'nocheck',true, 'test',false

intercept — Indicator for constant term.

true (default) | false.

Indicator for the constant term (intercept) in the fit, specified as the comma-separated pair consisting of 'intercept' and either true to include or false to remove the constant term from the model.

Example: 'intercept',false

Data Types: boolean

initialbeta — Initial estimate of beta.

Vector.

p x 1 vector. If initialbeta is not supplied (default), standard least squares is used to find the initial estimate of beta.

Example: 'initialbeta',[3.6 8.1]

Data Types: double

initialgamma — Initial estimate of gamma.

Vector.

Vector of length (r+1). If initialgamma is not supplied (default), the initial estimate of gamma is the OLS estimate from a regression where the response is given by squared residuals and the regressors are specified in input object Z (this regression also contains a constant term).

Example: 'initialgamma',[0.6 2.8]

Data Types: double

maxiter — Maximum number of iterations to find model parameters.

Scalar.

If not defined, maxiter is fixed to 200. Remark: to obtain the FGLS estimator (two-step estimator) it is enough to set maxiter=1.

Example: 'maxiter',8

Data Types: double
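The two-step idea behind the remark on maxiter can be sketched in plain MATLAB. The code below follows the textbook two-step procedure for a model with exponential variance (OLS, then a regression of the log squared residuals on the scedastic variables, then weighted least squares). It is an illustration on simulated data under assumed parameter values, and it is not claimed to reproduce the computations that regressHhar performs internally when maxiter=1.

```matlab
rng(1);                               % reproducible toy data
n = 500;
x = rand(n,1)*4 + 1;                  % one regressor, values in [1,5]
X  = [ones(n,1) x];                   % mean equation, with intercept
Zc = [ones(n,1) x];                   % scedastic equation, with constant
betaTrue  = [2; 1.5];                 % assumed regression parameters
gammaTrue = [-1; 0.6];                % assumed scedastic parameters
y = X*betaTrue + sqrt(exp(Zc*gammaTrue)).*randn(n,1);

% Step 1: OLS, then regress the log squared residuals on the scedastic
% variables (slopes are consistent, the constant is biased)
betaOLS  = X\y;
e        = y - X*betaOLS;
gammaHat = Zc\log(e.^2);

% Step 2: weighted least squares, each row scaled by 1/sigma_i
w        = 1./sqrt(exp(Zc*gammaHat));
betaFGLS = (X.*w)\(y.*w);
```

The bias in the constant of gammaHat only rescales all weights by the same factor, so it does not change betaFGLS.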

tol — The tolerance for controlling convergence.

Scalar.

If not defined, tol is fixed to 1e-8. Convergence is obtained if $||d_{old}-d_{new}||/||d_{new}||<tol$, where d is the vector of length p+r+1 which contains the regression and scedastic coefficients, $d=(\beta' \gamma')'$, and $d_{old}$ and $d_{new}$ are the values of d in iterations t and t+1, t=1,2, ..., maxiter.

Example: 'tol',0.0001

Data Types: double
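The stopping rule can be written in a couple of lines. This is a minimal sketch that assumes the Euclidean norm (the type of norm is not stated above) and uses hypothetical coefficient vectors; dold, dnew and relchange are illustrative names.

```matlab
tol  = 1e-8;                          % default tolerance stated above
dold = [1.20; 0.50; 0.30];            % hypothetical [beta; gamma] at iteration t
dnew = [1.20; 0.50; 0.30 + 1e-10];    % hypothetical [beta; gamma] at iteration t+1

% Relative change of the stacked coefficient vector (Euclidean norm assumed)
relchange = norm(dold - dnew)/norm(dnew);
converged = relchange < tol;
```

Because the criterion is relative to the size of dnew, the same tol can be used whatever the scale of the coefficients.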

msgiter — Level of output to display.

Scalar.

If msgiter=1, it is possible to see the estimates of the regression and scedastic parameters together with their standard errors and the values of the Wald, LM and likelihood ratio tests, and the value of the maximized log likelihood. If msgiter>1, it is also possible to monitor the estimates of the coefficients in each step of the iteration. If msgiter<1, nothing is displayed on the screen.

Example: 'msgiter',0

Data Types: double

nocheck — Check input arguments.

Boolean.

If nocheck is equal to true, no check is performed on matrix y and matrix X. Notice that y and X are left unchanged. In other words, the additional column of ones for the intercept is not added. By default nocheck=false.

Example: 'nocheck',true

Data Types: boolean

test — Test statistics.

Boolean.

If input option test is true, the Wald, likelihood ratio and Lagrange multiplier tests, together with the value of the maximized log likelihood, are given. The default is false, that is, no test is computed.

Example: 'test',false

Data Types: boolean

Output Arguments

out — description

Structure

Structure 'out' containing the following fields:

| Value | Description |
|---|---|
| Beta | p-by-3 matrix containing: 1st col = Estimates of regression coefficients; 2nd col = Standard errors of the estimates of regr coeff; 3rd col = t-tests of the estimates of regr coeff. |
| Gamma | (r+1)-by-3 matrix containing: 1st col = Estimates of scedastic coefficients; 2nd col = Standard errors of the estimates of scedastic coeff; 3rd col = t-tests of the estimates of scedastic coeff. Remark: the first row of matrix out.Gamma refers to the estimate of \( \sigma^2 \). In other words \( \hat \sigma^2= \exp(\hat \gamma_0) \). |
| typeH | 'har'. This output is necessary if function forecastH is called. |
| WA | scalar. Wald test. This field is present only if input option test is true. |
| LR | scalar. Likelihood ratio test. This field is present only if input option test is true. |
| LM | scalar. Lagrange multiplier test. This field is present only if input option test is true. |
| LogL | scalar. Complete maximized log likelihood. This field is present only if input option test is true. |
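As an illustration of how the first column of out.Gamma can be used, the sketch below recovers the estimate of \( \sigma^2 \) and a fitted variance from a vector of scedastic coefficients. To stay runnable without the toolbox, the coefficients are typed in by hand from the FGLS output displayed in the Related Examples section (constant, Income, Income square). The income value is hypothetical and chosen only for illustration.

```matlab
% Scedastic coefficients copied from the displayed FGLS output of the
% credit card example; with a fitted structure these would be out.Gamma(:,1)
gammaHat = [4.1374; 2.4857; -0.2449];

% The first element refers to the constant: exp of it estimates sigma^2
sigma2Hat = exp(gammaHat(1));

% Fitted variance for one observation with a hypothetical income value
income  = 3;                          % illustrative value only
zi      = [1; income; income^2];      % constant, Income, Income square
sigma2i = exp(zi'*gammaHat);
```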

More About

Additional Details

This routine implements Harvey's (1976) model of multiplicative heteroscedasticity which is a very flexible, general model that includes most of the useful formulations as special cases.

The general formulation is:

\[ \sigma^2_i =\sigma^2 \exp(z_i \alpha). \]

Let $z_i$ include a constant term so that \( z_i'=(1 \; q_i) \), where \( q_i \) is the original set of variables which are supposed to explain heteroscedasticity. This routine automatically adds a column of ones to input matrix Z (therefore Z does not have to include a constant term).

Now let

\[ \gamma'=[\log \sigma^2 \;\; \alpha'] = [ \gamma_0, \ldots, \gamma_r]. \]

Then the model is simply

\[ \sigma^2_i = \exp(\gamma' z_i). \]

Once the full parameter vector is estimated, \( \exp( \gamma_0)\) provides the estimator for \( \sigma^2 \).

The model is:

\[ y=X \times\beta+ \epsilon, \qquad \epsilon \sim N(0, \; \Sigma) \]

\[ \Sigma=diag(\sigma_1^2, ..., \sigma_n^2) \qquad \sigma_i^2=\exp(z_i^T \times \gamma) \qquad var(\epsilon_i)=\sigma_i^2 \qquad i=1, ..., n \]

$\beta$ = p-by-1 vector which contains regression parameters.

$\gamma$ = (r+1)-by-1 vector $\gamma_0, \ldots, \gamma_r$ (or written in MATLAB language $(\gamma(1), \ldots, \gamma(r+1))$) which contains skedastic parameters.

$X$ = n-by-p matrix containing explanatory variables in the mean equation (including the constant term if present).

$Z$ = n-by-(r+1) matrix containing the explanatory variables in the skedastic equation, $Z= (z_1^T, \ldots, z_n^T)^T$ with $z_i^T=(1, z_{i,1}, \ldots, z_{i,r})$ (or written in MATLAB language $z_i=(z(1), \ldots, z(r+1))$).
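To make the notation concrete, the following sketch simulates data from the model and evaluates the Gaussian log likelihood implied by $\epsilon \sim N(0, \Sigma)$ with diagonal $\Sigma$. The parameter values and the helper name loglik are assumptions made for this illustration; the code does not call the toolbox and is not its implementation.

```matlab
rng(2);                               % reproducible toy data
n = 200;
X = [ones(n,1) randn(n,2)];           % n-by-p with p=3, constant included
Z = [ones(n,1) randn(n,1)];           % n-by-(r+1) with r=1, constant included
beta  = [1; 2; -1];                   % assumed regression parameters
gamma = [0.5; 0.8];                   % assumed skedastic parameters

sigma2 = exp(Z*gamma);                % sigma_i^2 = exp(z_i^T gamma)
y = X*beta + sqrt(sigma2).*randn(n,1);

% Gaussian log likelihood: note that sum(log(sigma_i^2)) = sum(Z*g)
loglik = @(b,g) -0.5*( n*log(2*pi) + sum(Z*g) ...
                       + sum((y - X*b).^2 ./ exp(Z*g)) );
L0 = loglik(beta,gamma);              % value at the assumed parameters
```

A function of this form is what an iterative estimator of $\beta$ and $\gamma$ would try to maximize.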

REMARK1: given that the first element of $z_i$ is equal to 1, $\sigma_i^2$ can be written as

\[ \sigma_i^2 = \sigma^2 \times \exp(z_i(2:r+1)*\gamma(2:r+1)) = \exp(\gamma(1))*\exp(z_i(2:r+1)*\gamma(2:r+1)) \]

that is, once the full parameter vector $\gamma$ containing the skedastic parameters is estimated, $\exp( \gamma(1))$ provides the estimator for $\sigma^2$.
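The factorisation in REMARK1 is easy to check numerically. The sketch below uses hypothetical values and takes the single scedastic variable to be the logarithm of a positive variable x, so that the variance also equals $\sigma^2 x_i^{\alpha}$ with $\sigma^2=\exp(\gamma(1))$ and $\alpha=\gamma(2)$.

```matlab
x     = [0.5; 1; 2; 4];               % hypothetical positive variable
gamma = [log(3); 1.5];                % gamma(1)=log(sigma^2), gamma(2)=alpha
Z     = [ones(numel(x),1) log(x)];    % column of ones plus log(x)

lhs = exp(Z*gamma);                                   % exp(z_i^T gamma)
rhs = exp(gamma(1))*exp(Z(:,2:end)*gamma(2:end));     % sigma^2 * exp(...)
pow = 3*x.^1.5;                                       % sigma^2 * x_i^alpha

maxdiff = max(abs([lhs - rhs; lhs - pow]));           % numerically zero
```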

REMARK2: if $Z=log(X)$ then

\[ \sigma^2_i= \exp(z_i^T \times \gamma) = \prod_{j=1}^p x_{ij}^{\gamma_j} \qquad j=1, ..., p \]

REMARK3: if there is just one explanatory variable (say $x =(x_1, \ldots, x_n)$) which is responsible for heteroskedasticity and the model is

\[ \sigma^2_i=( \sigma^2 \times x_i^\alpha) \]

then it is necessary to supply $Z$ as $Z=log(x)$. In this case, given that the program automatically adds a column of ones to $Z$,

\[ \exp(Z(i,1) \times \gamma(1) +Z(i,2) \times \gamma(2))= \exp(\gamma(1))*x_i^{\gamma(2)} \]

therefore the $\exp$ of the first element of vector $\gamma$ (namely exp(gamma(1))) is the estimate of $\sigma^2$, while the second element of vector $\gamma$ (namely gamma(2)) is the estimate of $\alpha$.

References

Greene W.H. (1987), Econometric Analysis, Prentice Hall. [5th edition, section 11.7.1 pp. 232-235, 7th edition, section 9.7.1 pp. 280-282].